Contents of an Evaluation Plan

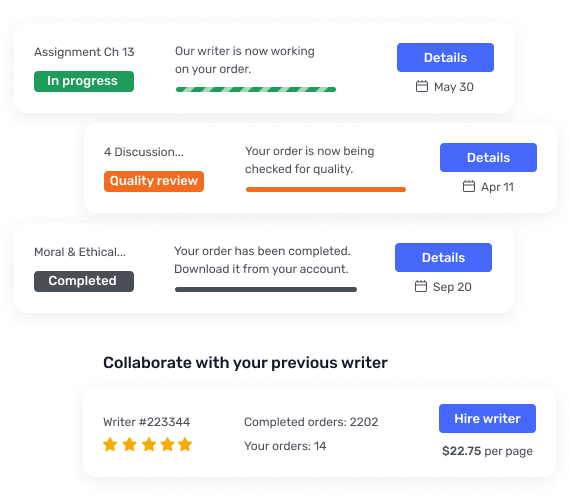

Develop an evaluation plan to ensure your program evaluations are carried out efficiently in the future. Note that bankers or funders may want or benefit from a copy of this plan.

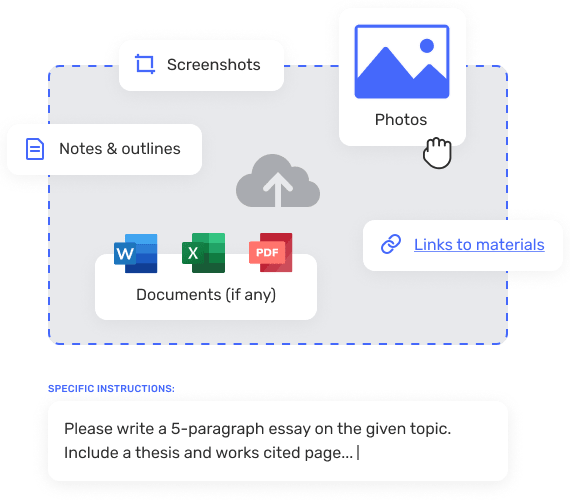

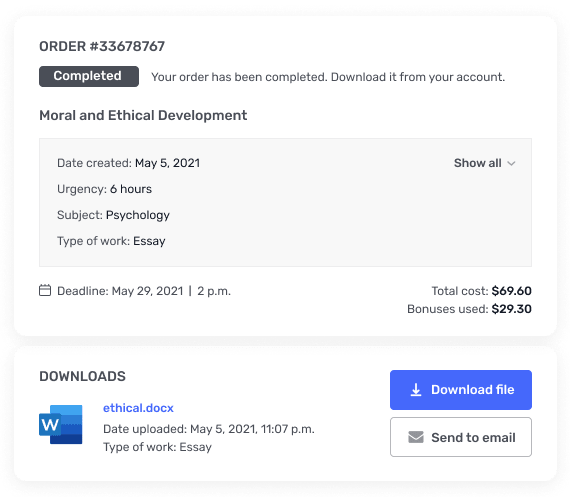

Ensure your evaluation plan is documented so you can regularly and efficiently carry out your evaluation activities. Record enough information in the plan so that someone outside of the organization can understand what you’re evaluating and how. Consider the following format for your report:

1. Title Page (name of the organization that is being, or has a product/service/program that is being, evaluated; date)
2. Table of Contents
3. Executive Summary (one-page, concise overview of findings and recommendations)
4. Purpose of the Report (what type of evaluation(s) was conducted, what decisions are being aided by the findings of the evaluation, who is making the decision, etc.)
5. Background About Organization and Product/Service/Program that is being evaluated
   a) Organization Description/History
   b) Product/Service/Program Description (that is being evaluated)
      i) Problem Statement (in the case of nonprofits, description of the community need that is being met by the product/service/program)
      ii) Overall Goal(s) of Product/Service/Program
      iii) Outcomes (or client/customer impacts) and Performance Measures (that can be measured as indicators toward the outcomes)
      iv) Activities/Technologies of the Product/Service/Program (general description of how the product/service/program is developed and delivered)
      v) Staffing (description of the number of personnel and roles in the organization that are relevant to developing and delivering the product/service/program)
6. Overall Evaluation Goals (e.g., what questions are being answered by the evaluation)
7. Methodology
   a) Types of data/information that were collected
   b) How data/information were collected (what instruments were used, etc.)
   c) How data/information were analyzed
   d) Limitations of the evaluation (e.g., cautions about findings/conclusions and how to use the findings/conclusions, etc.)
8. Interpretations and Conclusions (from analysis of the data/information)
9. Recommendations (regarding the decisions that must be made about the product/service/program)

Appendices: content of the appendices depends on the goals of the evaluation report, e.g.:

   a) Instruments used to collect data/information
   b) Data, e.g., in tabular format, etc.
   c) Testimonials, comments made by users of the product/service/program
   d) Case studies of users of the product/service/program
   e) Any related literature

Pitfalls to Avoid

1. Don’t balk at evaluation because it seems far too “scientific.” It’s not. Usually the first 20% of effort will generate the first 80% of the plan, and this is far better than nothing.
2. There is no “perfect” evaluation design. Don’t worry about the plan being perfect. It’s far more important to do something than to wait until every last detail has been tested.
3. Work hard to include some interviews in your evaluation methods. Questionnaires don’t capture “the story,” and the story is usually the most powerful depiction of the benefits of your services.
4. Don’t interview just the successes. You’ll learn a great deal about the program by understanding its failures, dropouts, etc.
5. Don’t throw away evaluation results once a report has been generated. Results don’t take up much room, and they can provide precious information later when trying to understand changes in the program.